THE IMAGE ANALYSIS ALGORITHM

The algorithm is designed with real-time operation in mind: it “rasters” the image once per frame and works in greyscale. It can be subdivided into two processes, image treatment and analysis, and further information can then be extracted through learning techniques. The MANO controller has been programmed in the Pure Data and GEM environments with several custom-made externals.

1. Image Treatment

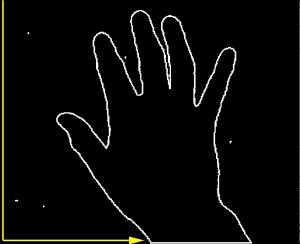

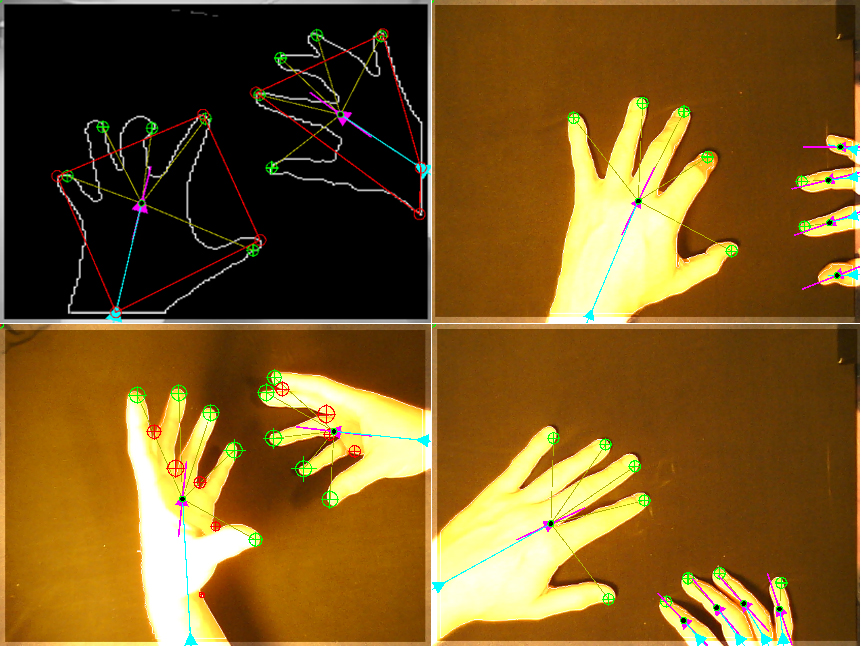

The image is rastered once, applying both a threshold-based contrast algorithm and an edge detection algorithm. In the contrast algorithm, a pixel whose value is above the threshold becomes white, and one below it becomes black. The edge detection algorithm then finds the edge of the white figure, so that the outline of each independent hand or finger is white and the rest of the image is black, as seen in Fig. 1.
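A minimal Python sketch of this single-pass treatment is shown below. It assumes the greyscale frame is a list of rows of values from 0 to 255, treats a pixel equal to the threshold as black, and uses a simple neighbourhood test as the edge detector; the source does not say which edge detector MANO uses, and `treat_image` and `t` are hypothetical names.

```python
def treat_image(grey, t):
    """Threshold and edge-detect a greyscale frame in one raster pass.

    grey: list of rows of grey values; t: contrast threshold (hypothetical).
    Returns a same-sized list of rows where edge pixels are 1 and all others 0.
    """
    h, w = len(grey), len(grey[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Contrast step: a pixel at or below the threshold counts as black.
            if grey[y][x] <= t:
                continue
            # Edge step (assumed detector): a white pixel is an edge pixel if any
            # of its 8 neighbours inside the frame is black. Neighbours outside
            # the frame are ignored, so outlines stay open where a hand crosses
            # the image border.
            if any(
                grey[ny][nx] <= t
                for ny in range(max(0, y - 1), min(h, y + 2))
                for nx in range(max(0, x - 1), min(w, x + 2))
            ):
                edges[y][x] = 1
    return edges
```

Testing the 8-neighbourhood rather than only the 4 direct neighbours yields a 4-connected outline, which keeps a later edge-following step simple.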

2. Analysis

After the image treatment process, the algorithm looks for the biggest entry section, that is, the longest white section along the image border, and calculates its center and size.

The center becomes the entry point parameter and the size becomes the entry size parameter, as shown in Fig. 1. Because the analysis starts from an entry section, only white sections that touch the image border are analyzed.
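The entry-section search can be sketched as follows. The sketch assumes it runs on the thresholded (binary) image and scans each side of the frame as a separate one-dimensional sequence, so a white section wrapping around a corner is counted as two sections; `longest_run` and `entry_section` are hypothetical names.

```python
def longest_run(values):
    """Return (center, size) of the longest run of white (1) values, or None."""
    best_start, best_len, start = 0, 0, None
    for i, v in enumerate(list(values) + [0]):  # trailing 0 closes a final run
        if v and start is None:
            start = i
        elif not v and start is not None:
            if i - start > best_len:
                best_start, best_len = start, i - start
            start = None
    if best_len == 0:
        return None
    return best_start + (best_len - 1) / 2, best_len


def entry_section(binary):
    """Biggest entry section over the four frame sides: (side, center, size) or None.

    binary: list of rows of 0/1 values (thresholded image). The center is a
    position along that side: an x coordinate for top/bottom, a y coordinate
    for left/right. Sides are scanned separately (assumption).
    """
    h, w = len(binary), len(binary[0])
    sides = {
        "top": binary[0],
        "bottom": binary[h - 1],
        "left": [row[0] for row in binary],
        "right": [row[w - 1] for row in binary],
    }
    best = None
    for side, values in sides.items():
        run = longest_run(values)
        if run is not None and (best is None or run[1] > best[2]):
            best = (side, run[0], run[1])
    return best
```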

The algorithm then follows the edge of the hand so that it forms a single, closed line of continuous pixels, pruning any deviations caused by hairs and other noise elements. The pixel coordinates of this line are stored in arrays, and the number of pixels in these arrays is the perimeter parameter.
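One way to follow the edge while pruning spurs is a depth-first walk that backs out of dead ends, sketched below. It assumes a 4-connected edge image, a start and a goal pixel at the two ends of the entry section (hypothetical inputs), and that the line is closed by the entry section itself; MANO's actual tracing and pruning rules are not given in the text.

```python
# 4-connected neighbour offsets (dx, dy): up, right, down, left.
NEIGHBOURS = ((0, -1), (1, 0), (0, 1), (-1, 0))


def trace_contour(edges, start, goal):
    """Follow edge pixels from start to goal, pruning dead-end spurs.

    edges: list of rows of 0/1; start, goal: (x, y) edge pixels (assumed to be
    the two ends of the entry section). Returns the coordinate arrays (xs, ys);
    the perimeter parameter is len(xs). Both arrays are empty if goal is unreachable.
    """
    h, w = len(edges), len(edges[0])
    path = [start]
    visited = {start}
    while path:
        x, y = path[-1]
        if (x, y) == goal:
            break
        for dx, dy in NEIGHBOURS:
            nx, ny = x + dx, y + dy
            if 0 <= nx < w and 0 <= ny < h and edges[ny][nx] and (nx, ny) not in visited:
                visited.add((nx, ny))
                path.append((nx, ny))
                break
        else:
            # Dead end (e.g. a hair): drop this pixel and back up one step.
            path.pop()
    xs = [p[0] for p in path]
    ys = [p[1] for p in path]
    return xs, ys
```

Because a spur always ends in a dead end, its pixels are popped off the path before the walk reaches the goal, so they never enter the perimeter arrays.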

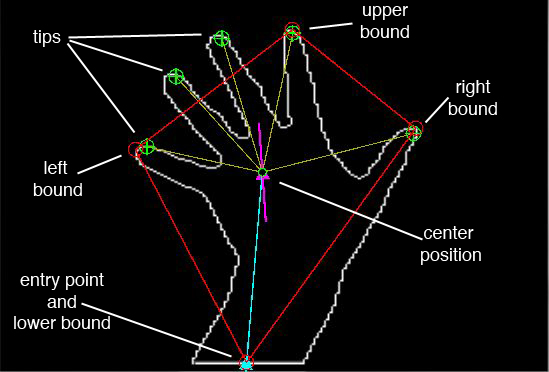

The center position is the average of all pixels in the perimeter and is shown in Fig. 1. An estimate of the general direction of the hand is also calculated from the positions of the central third of the perimeter points.
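The center position follows directly from the perimeter arrays. For the direction, the text only says it is based on the central third of the perimeter points; the sketch below assumes it is the angle of the vector from the entry point to the mean of those points, in image coordinates with y pointing down. Function names are hypothetical.

```python
import math


def center_position(xs, ys):
    """Average of all perimeter pixel coordinates."""
    n = len(xs)
    return sum(xs) / n, sum(ys) / n


def hand_direction(xs, ys, entry_point):
    """Angle (radians) from the entry point to the mean of the central third
    of the perimeter points. This exact definition is an assumption."""
    n = len(xs)
    lo, hi = n // 3, n - n // 3  # central third (at least one point for n >= 1)
    mx = sum(xs[lo:hi]) / (hi - lo)
    my = sum(ys[lo:hi]) / (hi - lo)
    # Image coordinates: y grows downwards, so -pi/2 means "pointing up".
    return math.atan2(my - entry_point[1], mx - entry_point[0])
```

Since the perimeter starts and ends on the border, its central third lies farthest along the outline from the entry section, which is why it gives a usable sense of where the hand is pointing.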

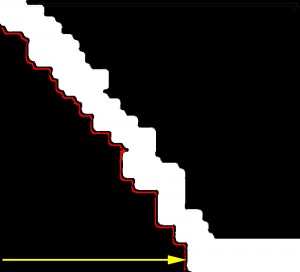

The perimeter arrays are then sampled every n pixels to obtain a subset of the pixel coordinates, shown in Fig. 2 as squares on the perimeter. Each successive pair of sampled pixels forms a vector with a direction. As shown in Fig. 2, the change in direction between successive vectors determines an angle that represents the curvature of the perimeter (the angle array). It follows that fingertips create peaks in the angle array and finger valleys create valleys. These positive and negative peaks become the tip and valley parameters. Tips are shown in Fig. 1 as pointers at the ends of fingers.
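The angle array and its peaks can be sketched as below. The sign convention is an assumption: with y pointing down and the outline traced so that the hand lies on the right-hand side of the direction of travel, fingertips give positive turns. Peaks whose magnitude is below a hypothetical `min_turn` are ignored to reject noise.

```python
import math


def angle_array(xs, ys, n):
    """Turning angle (radians) between successive vectors of the perimeter
    sampled every n pixels."""
    px, py = xs[::n], ys[::n]
    # Direction of each vector joining two successive sampled pixels.
    headings = [math.atan2(py[i + 1] - py[i], px[i + 1] - px[i]) for i in range(len(px) - 1)]
    angles = []
    for a, b in zip(headings, headings[1:]):
        # Wrap the change of direction into [-pi, pi).
        angles.append((b - a + math.pi) % (2 * math.pi) - math.pi)
    return angles


def tips_and_valleys(angles, min_turn):
    """Indices of sampled pixels at positive (tip) and negative (valley) peaks."""
    tips, valleys = [], []
    for i in range(1, len(angles) - 1):
        a = angles[i]
        if a >= min_turn and a > angles[i - 1] and a >= angles[i + 1]:
            tips.append(i + 1)  # angles[i] is the turn at sampled pixel i + 1
        elif a <= -min_turn and a < angles[i - 1] and a <= angles[i + 1]:
            valleys.append(i + 1)
    return tips, valleys
```

A returned index k refers to the sampled pixel at position k * n in the original perimeter arrays. Larger n smooths out pixel-level jaggedness at the cost of blunting narrow fingertips.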

Tips are presented both as vectors from the center position to the tip and as independent locations. The first representation depends on the center position, while the second is independent of it.
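The two tip representations can be sketched as follows; the polar form (length and angle of the center-to-tip vector) is an added assumption that may be convenient when mapping to sound parameters, and `tip_representations` is a hypothetical name.

```python
import math


def tip_representations(center, tips):
    """For each tip (x, y), return its absolute location, its vector from the
    center position, and that vector in polar form (length, angle)."""
    cx, cy = center
    out = []
    for tx, ty in tips:
        dx, dy = tx - cx, ty - cy
        out.append({
            "location": (tx, ty),  # independent of the center position
            "vector": (dx, dy),    # relative to the center position
            "polar": (math.hypot(dx, dy), math.atan2(dy, dx)),  # assumed extra form
        })
    return out
```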

3. Further Learning

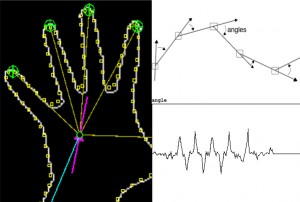

The image analysis section is iterative, so it can find several hands, as shown in the first frame of Fig. 3 and Fig. 4. In Fig. 3, however, it is clear that these masses are four fingers rather than hands, so it is necessary to distinguish fingers from hands. The “learning” portion of the algorithm is concerned with classifying each white mass that enters the frame as a finger or a hand.

As we can see in the next frame of Fig. 3, as the hand continues to enter the frame, the four fingers that were detected independently now form part of the same shape. This bigger shape is now classified as a hand, and the thumb, as a separate shape, is classified as a finger.
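The text does not specify the classification rule. One rule consistent with Fig. 3, sketched here purely as an assumption, compares each mass with the previous frame: a mass that overlaps two or more earlier masses has absorbed them and becomes a hand, a mass that overlaps exactly one inherits its label, and a newly entered mass starts as a finger. Masses are represented as sets of (x, y) pixels, and `classify_masses` is a hypothetical name.

```python
def classify_masses(previous, current):
    """Label each mass in the current frame as "hand" or "finger".

    previous: list of (pixel_set, label) pairs from the last frame.
    current: list of pixel sets found in this frame.
    """
    labels = []
    for mass in current:
        parents = [label for pixels, label in previous if pixels & mass]
        if len(parents) >= 2:
            labels.append("hand")      # several earlier masses merged into one shape
        elif len(parents) == 1:
            labels.append(parents[0])  # same mass as before: keep its label
        else:
            labels.append("finger")    # newly entered mass: assume a finger for now
    return labels
```

Under this rule a whole hand entering in one frame would first be labelled a finger, so a fuller implementation would probably also weigh size parameters such as the entry size or perimeter.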

Other tasks of the “learning” section include finding the best tracks for parameters, so that multiple hands, fingers, and fingertips are treated independently and continuously from frame to frame.
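The text does not say how tracks are chosen. A common approach, assumed here, is greedy nearest-neighbour matching between positions in consecutive frames, with a hypothetical `max_dist` beyond which a point starts a new track. The function name and parameters are illustrative only.

```python
import math


def track(prev_tracks, points, max_dist, new_ids):
    """Assign this frame's points to existing track ids by greedy
    nearest-neighbour matching.

    prev_tracks: dict mapping track id -> (x, y) from the previous frame.
    points: list of (x, y) positions found in the current frame.
    new_ids: iterator supplying fresh integer ids for new tracks.
    Returns a dict mapping track id -> (x, y) for the current frame.
    """
    # All candidate (distance, id, point index) pairs, closest first.
    pairs = sorted(
        (math.dist(p, q), tid, j)
        for tid, p in prev_tracks.items()
        for j, q in enumerate(points)
    )
    tracks, used_ids, used_points = {}, set(), set()
    for d, tid, j in pairs:
        if d > max_dist:
            break  # every remaining pair is even farther apart
        if tid in used_ids or j in used_points:
            continue
        tracks[tid] = points[j]
        used_ids.add(tid)
        used_points.add(j)
    # Unmatched points start new tracks; unmatched old ids simply end.
    for j, q in enumerate(points):
        if j not in used_points:
            tracks[next(new_ids)] = q
    return tracks
```

Greedy matching is simple and fast enough per frame, but it can swap identities when two fingertips cross; an optimal assignment method would avoid that at higher cost.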

Several discrete features can be extracted from continuous data. When a mass appears or disappears, we obtain triggers (onsets and releases). When dealing with continuous parameters, changes in direction can also be detected.
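Both kinds of discrete feature can be sketched in a few lines, assuming each frame yields a set of mass or track ids and each continuous parameter is available as a list of per-frame values. The function names are hypothetical.

```python
def onsets_and_releases(prev_ids, curr_ids):
    """Ids that appeared (onsets) and disappeared (releases) between two frames."""
    return sorted(curr_ids - prev_ids), sorted(prev_ids - curr_ids)


def direction_changes(values):
    """Indices of samples where a continuous parameter reverses direction.

    Flat steps are skipped, so a plateau does not count as a reversal; the
    reported index is the last sample before the new direction begins.
    """
    changes, prev_sign = [], 0
    for i in range(1, len(values)):
        step = values[i] - values[i - 1]
        sign = (step > 0) - (step < 0)
        if sign == 0:
            continue
        if prev_sign and sign != prev_sign:
            changes.append(i - 1)
        prev_sign = sign
    return changes
```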

4. Other considerations

As shown in Fig. 4, hands and fingers can enter from each of the four sides of the frame. This allows the performer to access different mappings depending on how they enter the frame.

Because MANO is a multi-hand/finger controller, parameters relating multiple elements can also be calculated. For example, a particular mapping might be assigned to a set of parameter differences between a hand coming in from below and one coming in from the side.
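As an illustration of such relational parameters, the sketch below assumes each mass is a dict holding its entry side and a few numeric parameters (hypothetical keys), and selects a mapping from a hypothetical table keyed by the pair of entry sides. The mapping names are placeholders, not MANO's.

```python
# Numeric per-mass parameters to compare (hypothetical keys).
NUMERIC = ("entry_size", "perimeter", "center_x", "center_y")

# Hypothetical mapping table keyed by the unordered pair of entry sides.
MAPPINGS = {
    frozenset({"bottom", "left"}): "filter_sweep",
    frozenset({"bottom", "right"}): "pitch_bend",
}


def relational_parameters(a, b):
    """Return (mapping name or None, parameter differences b - a) for two masses."""
    mapping = MAPPINGS.get(frozenset({a["side"], b["side"]}))
    diffs = {k: b[k] - a[k] for k in NUMERIC}
    return mapping, diffs
```

Keying the table on the pair of sides lets the entry side, which the performer chooses when entering the frame, switch mappings, while the differences supply the continuous control values.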